leads4pass has updated its Amazon SAA-C02 dumps! The latest SAA-C02 exam questions can help you pass the exam! All questions have been corrected to ensure authenticity and effectiveness! Download the leads4pass SAA-C02 dumps (Total Questions: 439 Q&A SAA-C02 Dumps)

Examineeverything Exam Table of Contents:

- Latest Amazon SAA-C02 Google Drive

- Effective Amazon SAA-C02 practice test questions

- leads4pass Year-round Discount Code

- What are the advantages of leads4pass?

Latest Amazon SAA-C02 Google Drive

[Latest PDF] Free Amazon SAA-C02 pdf dumps download from Google Drive: https://drive.google.com/file/d/1puTG8aVJOZjo3QqM5mL8epeiQQjAP3nV/

Share Amazon SAA-C02 practice test for free

QUESTION 1

A company runs an application in a branch office within a small data closet with no virtualized compute resources. The application data is stored on an NFS volume. Compliance standards require a daily offsite backup of the NFS volume.

Which solution meets these requirements?

A. Install an AWS Storage Gateway file gateway on-premises to replicate the data to Amazon S3.

B. Install an AWS Storage Gateway file gateway hardware appliance on-premises to replicate the data to Amazon S3.

C. Install an AWS Storage Gateway volume gateway with stored volumes on-premises to replicate the data to Amazon S3.

D. Install an AWS Storage Gateway volume gateway with cached volumes on-premises to replicate the data to Amazon S3.

Correct Answer: B

AWS Storage Gateway Hardware Appliance

Storage Gateway is available as a hardware appliance, adding to the existing support for VMware ESXi, Microsoft Hyper-V, and Amazon EC2. This means that you can make use of Storage Gateway in situations where you do not have a virtualized environment, server-class hardware, or IT staff with the specialized skills needed to manage them. You can order appliances from Amazon.com for delivery to branch offices, warehouses, and “outpost” offices that lack dedicated IT resources. Setup is quick and easy, and gives you access to three storage solutions:

File Gateway: a file interface to Amazon S3, accessible via NFS or SMB. The files are stored as S3 objects, allowing you to make use of specialized S3 features such as lifecycle management and cross-region replication. You can trigger AWS Lambda functions, run Amazon Athena queries, and use Amazon Macie to discover and classify sensitive data.

Reference: https://aws.amazon.com/blogs/aws/new-aws-storage-gateway-hardware-appliance/

https://aws.amazon.com/storagegateway/file/

QUESTION 2

A solutions architect is implementing a document review application using an Amazon S3 bucket for storage. The solution must prevent accidental deletion of the documents and ensure that all versions of the documents are available. Users must be able to download, modify, and upload documents. Which combination of actions should be taken to meet these requirements? (Select TWO.)

A. Enable a read-only bucket ACL

B. Enable versioning on the bucket

C. Attach an IAM policy to the bucket

D. Enable MFA Delete on the bucket

E. Encrypt the bucket using AWS KMS

Correct Answer: BD

Object Versioning

Use Amazon S3 Versioning to keep multiple versions of an object in one bucket. For example, you could store my-image.jpg (version 111111) and my-image.jpg (version 222222) in a single bucket. S3 Versioning protects you from the consequences of unintended overwrites and deletions. You can also use it to archive objects so that you have access to previous versions.

To customize your data retention approach and control storage costs, use object versioning with Object Lifecycle management. For information about creating S3 Lifecycle policies using the AWS Management Console, see How Do I Create a Lifecycle Policy for an S3 Bucket? in the Amazon Simple Storage Service Console User Guide.

If you have an object expiration lifecycle policy in your non-versioned bucket and you want to maintain the same permanent delete behavior when you enable versioning, you must add a noncurrent expiration policy. The noncurrent expiration lifecycle policy will manage the deletes of the noncurrent object versions in the version-enabled bucket. (A version-enabled bucket maintains one current and zero or more noncurrent object versions.)

You must explicitly enable S3 Versioning on your bucket. By default, S3 Versioning is disabled. Regardless of whether you have enabled Versioning, each object in your bucket has a version ID. If you have not enabled Versioning, Amazon S3 sets the value of the version ID to null. If S3 Versioning is enabled, Amazon S3 assigns a version ID value for the object. This value distinguishes it from other versions of the same key.

Enabling and suspending versioning is done at the bucket level. When you enable versioning on an existing bucket, objects that are already stored in the bucket are unchanged. The version IDs (null), contents, and permissions remain the same. After you enable S3 Versioning for a bucket, each object that is added to the bucket gets a version ID, which distinguishes it from other versions of the same key.
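To make the versioning behavior concrete, here is a toy in-memory model, a sketch rather than the S3 API: every PUT appends a new version, a plain DELETE appends a delete marker, and older versions stay retrievable by ID. The class and method names are hypothetical, and the sequential IDs stand in for the opaque version IDs that S3 actually generates.

```python
import itertools
from typing import Dict, List, Optional, Tuple


class VersionedBucket:
    """Toy in-memory model of a versioning-enabled bucket (illustration only, not the S3 API)."""

    def __init__(self) -> None:
        # key -> ordered history of (version_id, body); a body of None is a delete marker
        self._history: Dict[str, List[Tuple[str, Optional[bytes]]]] = {}
        # Sequential IDs stand in for the opaque version IDs that S3 generates
        self._ids = itertools.count(1)

    def put(self, key: str, body: bytes) -> str:
        version_id = f"v{next(self._ids)}"
        self._history.setdefault(key, []).append((version_id, body))
        return version_id

    def delete(self, key: str) -> str:
        # A DELETE without a version ID only adds a delete marker; earlier versions remain
        version_id = f"v{next(self._ids)}"
        self._history.setdefault(key, []).append((version_id, None))
        return version_id

    def get(self, key: str, version_id: Optional[str] = None) -> Optional[bytes]:
        history = self._history.get(key, [])
        if version_id is None:
            # The newest entry is the current version; None means missing or deleted
            return history[-1][1] if history else None
        for vid, body in history:
            if vid == version_id:
                return body
        return None

    def versions(self, key: str) -> List[str]:
        return [vid for vid, _ in self._history.get(key, [])]


bucket = VersionedBucket()
first = bucket.put("my-image.jpg", b"draft")
bucket.put("my-image.jpg", b"final")
bucket.delete("my-image.jpg")
print(bucket.get("my-image.jpg"))         # None: the current version is a delete marker
print(bucket.get("my-image.jpg", first))  # b'draft': the overwritten version survives
```

An accidental delete or overwrite therefore never destroys data in this model; only a delete that names a specific version would, which is exactly the operation MFA Delete guards.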

Only Amazon S3 generates version IDs, and they can't be edited. Version IDs are Unicode, UTF-8 encoded, URL-ready, opaque strings that are no more than 1,024 bytes long. The following is an example: 3/L4kqtJlcpXroDTDmJ+rmSpXd3dIbrHY+MTRCxf3vjVBH40Nr8X8gdRQBpUMLUo.

Using MFA delete

If a bucket's versioning configuration is MFA Delete–enabled, the bucket owner must include the x-amz-mfa request header in requests to permanently delete an object version or change the versioning state of the bucket. Requests that include x-amz-mfa must use HTTPS. The header's value is the concatenation of your authentication device's serial number, a space, and the authentication code displayed on it. If you do not include this request header, the request fails.
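As a sketch of the header format described above, the helper below builds the "serial, space, code" string and packages it with a versioning configuration that enables both versioning and MFA Delete. The keyword names follow boto3's S3 `put_bucket_versioning` call (`Bucket`, `MFA`, and `VersioningConfiguration` with `Status` and `MFADelete`). The bucket name, device ARN, and code are hypothetical placeholders, and the actual API call is left commented out because it needs the bucket owner's credentials and a live MFA code.

```python
def mfa_value(device_serial: str, auth_code: str) -> str:
    # Device serial number (the ARN for a virtual MFA device), one space, then the current code
    return f"{device_serial.strip()} {auth_code.strip()}"


def versioning_with_mfa_delete(bucket: str, device_serial: str, auth_code: str) -> dict:
    # Keyword arguments shaped for boto3's S3 put_bucket_versioning call
    return {
        "Bucket": bucket,
        "MFA": mfa_value(device_serial, auth_code),
        "VersioningConfiguration": {"Status": "Enabled", "MFADelete": "Enabled"},
    }


kwargs = versioning_with_mfa_delete(
    "example-document-review-bucket",                         # hypothetical bucket name
    "arn:aws:iam::123456789012:mfa/root-account-mfa-device",  # hypothetical MFA device ARN
    "123456",                                                 # hypothetical code from the device
)
print(kwargs["MFA"])
# With the bucket owner's credentials (requires boto3):
# import boto3
# boto3.client("s3").put_bucket_versioning(**kwargs)
```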

Reference: https://aws.amazon.com/s3/features/

https://docs.aws.amazon.com/AmazonS3/latest/dev/ObjectVersioning.html

https://docs.aws.amazon.com/AmazonS3/latest/dev/UsingMFADelete.html

QUESTION 3

A company is looking for a solution that can store video archives in AWS from old news footage. The company needs to minimize costs and will rarely need to restore these files. When the files are needed, they must be available in a maximum of five minutes.

What is the MOST cost-effective solution?

A. Store the video archives in Amazon S3 Glacier and use Expedited retrievals.

B. Store the video archives in Amazon S3 Glacier and use Standard retrievals.

C. Store the video archives in Amazon S3 Standard-Infrequent Access (S3 Standard-IA).

D. Store the video archives in Amazon S3 One Zone-Infrequent Access (S3 One Zone-IA).

Correct Answer: A
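The five-minute limit is what rules out the slower tiers. The sketch below filters S3 Glacier retrieval options by their typical worst-case retrieval time, using the published ranges of 1 to 5 minutes for Expedited, 3 to 5 hours for Standard, and 5 to 12 hours for Bulk. These are typical figures rather than guarantees (AWS notes the Expedited window does not cover the very largest archives), and the function name is hypothetical.

```python
from datetime import timedelta

# Typical (best, worst) retrieval windows for archives in S3 Glacier
RETRIEVAL_TIERS = {
    "Expedited": (timedelta(minutes=1), timedelta(minutes=5)),
    "Standard": (timedelta(hours=3), timedelta(hours=5)),
    "Bulk": (timedelta(hours=5), timedelta(hours=12)),
}


def tiers_meeting_deadline(deadline: timedelta) -> list:
    # Keep only the tiers whose worst-case retrieval time fits inside the deadline
    return [name for name, (_, worst) in RETRIEVAL_TIERS.items() if worst <= deadline]


print(tiers_meeting_deadline(timedelta(minutes=5)))  # ['Expedited']
```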

QUESTION 4

An application requires a development environment (DEV) and a production environment (PROD) for several years. The DEV instances will run for 10 hours each day during normal business hours, while the PROD instances will run 24 hours each day. A solutions architect needs to determine a compute instance purchase strategy to minimize costs.

Which solution is the MOST cost-effective?

A. DEV with Spot Instances and PROD with On-Demand Instances

B. DEV with On-Demand Instances and PROD with Spot Instances

C. DEV with Scheduled Reserved Instances and PROD with Reserved Instances

D. DEV with On-Demand Instances and PROD with Scheduled Reserved Instances

Correct Answer: C

QUESTION 5

A company runs a web service on Amazon EC2 instances behind an Application Load Balancer. The instances run in an Amazon EC2 Auto Scaling group across two Availability Zones. The company needs a minimum of four instances at all times to meet the required service level agreement (SLA) while keeping costs low.

If an Availability Zone fails, how can the company remain compliant with the SLA?

A. Add a target tracking scaling policy with a short cooldown period

B. Change the Auto Scaling group launch configuration to use a larger instance type

C. Change the Auto Scaling group to use six servers across three Availability Zones

D. Change the Auto Scaling group to use eight servers across two Availability Zones

Correct Answer: C
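The SLA arithmetic behind options C and D can be checked directly. This sketch assumes Auto Scaling keeps the group evenly balanced, so the largest zone holds `ceil(total / zones)` instances, and counts what survives when that zone is lost. The function names are hypothetical.

```python
import math


def surviving_instances(total: int, zones: int) -> int:
    # With an even spread, one zone holds at most ceil(total / zones) instances
    return total - math.ceil(total / zones)


def min_group_size(required: int, zones: int) -> int:
    # Smallest group that still has `required` instances after losing its largest zone
    n = required
    while surviving_instances(n, zones) < required:
        n += 1
    return n


for total, zones in [(6, 3), (8, 2)]:
    print(f"{total} instances across {zones} AZs -> {surviving_instances(total, zones)} left after one AZ fails")
```

Both layouts leave four instances after losing one zone, but spreading across three zones reaches that floor with six instances instead of eight.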

QUESTION 6

A company has a two-tier application architecture that runs in public and private subnets. Amazon EC2 instances running the web application are in the public subnet, and a database runs in the private subnet. The web application instances and the database are running in a single Availability Zone (AZ).

Which combination of steps should a solutions architect take to provide high availability for this architecture? (Select TWO.)

A. Create new public and private subnets in the same AZ for high availability

B. Create an Amazon EC2 Auto Scaling group and Application Load Balancer spanning multiple AZs

C. Add the existing web application instances to an Auto Scaling group behind an Application Load Balancer

D. Create new public and private subnets in a new AZ. Create a database using Amazon EC2 in one AZ

E. Create new public and private subnets in the same VPC, each in a new AZ. Migrate the database to an Amazon RDS Multi-AZ deployment

Correct Answer: BE

You can take advantage of the safety and reliability of geographic redundancy by spanning your Auto Scaling group across multiple Availability Zones within a Region and then attaching a load balancer to distribute incoming traffic across those zones. Incoming traffic is distributed equally across all Availability Zones enabled for your load balancer.

Note:

An Auto Scaling group can contain Amazon EC2 instances from multiple Availability Zones within the same Region. However, an Auto Scaling group can't contain instances from multiple Regions. When one Availability Zone becomes unhealthy or unavailable, Amazon EC2 Auto Scaling launches new instances in an unaffected zone. When the unhealthy Availability Zone returns to a healthy state, Amazon EC2 Auto Scaling automatically redistributes the application instances evenly across all of the zones for your Auto Scaling group. Amazon EC2 Auto Scaling does this by attempting to launch new instances in the Availability Zone with the fewest instances. If the attempt fails, however, Amazon EC2 Auto Scaling attempts to launch in other Availability Zones until it succeeds. You can expand the availability of your scaled and load-balanced application by adding an Availability Zone to your Auto Scaling group and then enabling that zone for your load balancer. After you've enabled the new Availability Zone, the load balancer begins to route traffic equally among all the enabled zones.
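The launch rule described in the note (try the zone with the fewest instances first, then fall back to other zones if the launch fails) can be sketched as follows. This is a simplified model of the zone-selection rule only, with hypothetical function names and zone labels; it does not model the termination side of rebalancing.

```python
from typing import Callable, Dict


def choose_launch_zone(counts: Dict[str, int], can_launch: Callable[[str], bool]) -> str:
    # Try zones from fewest to most instances (ties broken by name); fall back if a launch fails
    for zone in sorted(counts, key=lambda z: (counts[z], z)):
        if can_launch(zone):
            return zone
    raise RuntimeError("no Availability Zone could launch an instance")


def launch(counts: Dict[str, int], new_instances: int, can_launch: Callable[[str], bool]) -> Dict[str, int]:
    counts = dict(counts)  # work on a copy so the caller's dict is unchanged
    for _ in range(new_instances):
        counts[choose_launch_zone(counts, can_launch)] += 1
    return counts


# Zone "b" has recovered and is empty, so new instances go there first
print(launch({"us-east-1a": 2, "us-east-1b": 0}, 2, lambda z: True))
# If zone "b" cannot launch, the fallback places both instances in zone "a"
print(launch({"us-east-1a": 2, "us-east-1b": 0}, 2, lambda z: z != "us-east-1b"))
```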

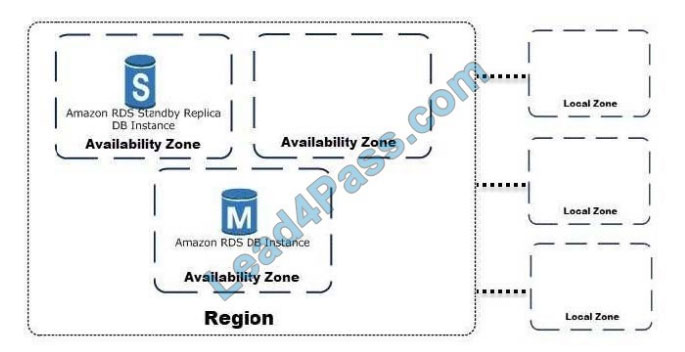

High Availability (Multi-AZ) for Amazon RDS

Amazon RDS provides high availability and failover support for DB instances using Multi-AZ deployments. Amazon RDS uses several different technologies to provide failover support. Multi-AZ deployments for MariaDB, MySQL, Oracle, and PostgreSQL DB instances use Amazon's failover technology. SQL Server DB instances use SQL Server Database Mirroring (DBM) or Always On Availability Groups (AGs). In a Multi-AZ deployment, Amazon RDS automatically provisions and maintains a synchronous standby replica in a different Availability Zone. The primary DB instance is synchronously replicated across Availability Zones to a standby replica to provide data redundancy, eliminate I/O freezes, and minimize latency spikes during system backups. Running a DB instance with high availability can enhance availability during planned system maintenance, and help protect your databases against DB instance failure and Availability Zone disruption.

For more information on Availability Zones, see Regions, Availability Zones, and Local Zones

https://docs.aws.amazon.com/autoscaling/ec2/userguide/as-add-availability-zone.html

https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/Concepts.MultiAZ.html

QUESTION 7

A solutions architect is designing a multi-region disaster recovery solution for an application that will provide public API access. The application will use Amazon EC2 instances with a user-data script to load application code and an Amazon RDS for MySQL database. The Recovery Time Objective (RTO) is 3 hours and the Recovery Point Objective (RPO) is 24 hours. Which architecture would meet these requirements at the LOWEST cost?

A. Use an Application Load Balancer for Region failover. Deploy new EC2 instances with the user data script. Deploy separate RDS instances in each Region

B. Use Amazon Route 53 for Region failover. Deploy new EC2 instances with the user data script. Create a read replica of the RDS instance in a backup Region

C. Use Amazon API Gateway for the public APIs and Region failover. Deploy new EC2 instances with the user data script. Create a MySQL read replica of the RDS instance in a backup Region

D. Use Amazon Route 53 for Region failover. Deploy new EC2 instances with the user data script for APIs and create a snapshot of the RDS instance daily for a backup. Replicate the snapshot to a backup Region

Correct Answer: D

QUESTION 8

A recent analysis of a company's IT expenses highlights the need to reduce backup costs. The company's chief information officer wants to simplify the on-premises backup infrastructure and reduce costs by eliminating the use of physical backup tapes. The company must preserve the existing investment in the on-premises backup applications and workflows.

What should a solutions architect recommend?

A. Set up AWS Storage Gateway to connect with the backup applications using the NFS interface.

B. Set up an Amazon EFS file system that connects with the backup applications using the NFS interface

C. Set up an Amazon EFS file system that connects with the backup applications using the iSCSI interface

D. Set up AWS Storage Gateway to connect with the backup applications using the iSCSI-virtual tape library (VTL) interface.

Correct Answer: D

QUESTION 9

A company hosts its core network services, including directory services and DNS, in its on-premises data center. The data center is connected to the AWS Cloud using AWS Direct Connect (DX). Additional AWS accounts are planned that will require quick, cost-effective, and consistent access to these network services.

What should a solutions architect implement to meet these requirements with the LEAST amount of operational overhead?

A. Create a DX connection in each new account. Route the network traffic to the on-premises servers.

B. Configure VPC endpoints in the DX VPC for all required services. Route the network traffic to the on-premises servers.

C. Create a VPN connection between each new account and the DX VPC. Route the network traffic to the on-premises servers.

D. Configure AWS Transit Gateway between the accounts. Assign DX to the transit gateway and route network traffic to the on-premises servers.

Correct Answer: D

QUESTION 10

A web application runs on Amazon EC2 instances behind an Application Load Balancer. The application allows users to create custom reports of historical weather data. Generating a report can take up to 5 minutes. These long-running requests use many of the available incoming connections, making the system unresponsive to other users.

How can a solutions architect make the system more responsive?

A. Use Amazon SQS with AWS Lambda to generate reports.

B. Increase the idle timeout on the Application Load Balancer to 5 minutes.

C. Update the client-side application code to increase its request timeout to 5 minutes.

D. Publish the reports to Amazon S3 and use Amazon CloudFront for downloading to the user.

Correct Answer: A
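Option A helps because it decouples request handling from report generation: the web tier accepts the request, puts a job on a queue, and returns immediately, while a separate worker generates the report. The sketch below models that pattern locally, with Python's `queue.Queue` standing in for Amazon SQS and a background thread standing in for the Lambda consumer. All function names and report parameters are hypothetical.

```python
import queue
import threading
import uuid

jobs = queue.Queue()  # stands in for an Amazon SQS queue
results = {}          # stands in for wherever finished reports are stored


def submit_report_request(params: dict) -> str:
    """Web tier: enqueue the work and return a job ID at once instead of holding the connection open."""
    job_id = str(uuid.uuid4())
    jobs.put((job_id, params))
    return job_id


def generate_report(params: dict) -> str:
    # Placeholder for the slow (up to 5 minute) report generation
    return f"report for {params['station']} from {params['start']} to {params['end']}"


def worker() -> None:
    """Stands in for the Lambda function that consumes messages from the queue."""
    while True:
        job_id, params = jobs.get()
        try:
            results[job_id] = generate_report(params)
        finally:
            jobs.task_done()


threading.Thread(target=worker, daemon=True).start()

job = submit_report_request({"station": "KSEA", "start": "2019-01-01", "end": "2019-12-31"})
jobs.join()  # only for this demo; a real client would poll or be notified when the report is ready
print(results[job])
```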

QUESTION 11

A solutions architect is designing a solution to access a catalog of images and provide users with the ability to submit requests to customize images. Image customization parameters will be in any request sent to an Amazon API Gateway API. The customized image will be generated on demand, and users will receive a link they can click to view or download their customized image. The solution must be highly available for viewing and customizing images. What is the MOST cost-effective solution to meet these requirements?

A. Use Amazon EC2 instances to manipulate the original image into the requested customization. Store the original and manipulated images in Amazon S3. Configure an Elastic Load Balancer in front of the EC2 instances

B. Use AWS Lambda to manipulate the original image to the requested customization. Store the original and manipulated images in Amazon S3. Configure an Amazon CloudFront distribution with the S3 bucket as the origin

C. Use AWS Lambda to manipulate the original image to the requested customization. Store the

D. Use Amazon EC2 instances to manipulate the original image into the requested customization. Store the original images in Amazon S3 and the manipulated images in Amazon DynamoDB. Configure an Amazon CloudFront distribution with the S3 bucket as the origin

Correct Answer: B

AWS Lambda is a compute service that lets you run code without provisioning or managing servers. AWS Lambda executes your code only when needed and scales automatically, from a few requests per day to thousands per second. You pay only for the compute time you consume; there is no charge when your code is not running. With AWS Lambda, you can run code for virtually any type of application or backend service, all with zero administration. AWS Lambda runs your code on a high-availability compute infrastructure and performs all of the administration of the compute resources, including server and operating system maintenance, capacity provisioning and automatic scaling, code monitoring, and logging. All you need to do is supply your code in one of the languages that AWS Lambda supports.

Storing your static content with S3 provides a lot of advantages. But to help optimize your application's performance and security while effectively managing cost, we recommend that you also set up Amazon CloudFront to work with your S3 bucket to serve and protect the content. CloudFront is a content delivery network (CDN) service that delivers static and dynamic web content, video streams, and APIs around the world, securely and at scale. By design, delivering data out of CloudFront can be more cost-effective than delivering it from S3 directly to your users.

CloudFront serves content through a worldwide network of data centers called Edge Locations. Using edge servers to cache and serve content improves performance by providing content closer to where viewers are located. CloudFront has edge servers in locations all around the world.
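Putting the pieces of option B together, a Lambda function behind an API Gateway proxy integration could read the customization parameters, derive an S3 key for the result, and return a CloudFront link. This is a sketch under stated assumptions: the `imageId` path parameter, the allowed parameter names, and the distribution domain are hypothetical, and the actual image processing and S3 upload are left as a comment. The `statusCode`/`body` response shape is the one API Gateway expects from a Lambda proxy integration.

```python
import hashlib
import json
from urllib.parse import quote

CLOUDFRONT_DOMAIN = "d111111abcdef8.cloudfront.net"  # hypothetical distribution domain
ALLOWED_PARAMS = {"width", "height", "grayscale"}     # hypothetical customization parameters


def customized_key(image_id: str, params: dict) -> str:
    # Stable key per (image, parameters) so repeat requests map to the same cached object
    canonical = json.dumps(params, sort_keys=True)
    digest = hashlib.sha256(f"{image_id}|{canonical}".encode()).hexdigest()[:16]
    return f"custom/{image_id}/{digest}.jpg"


def lambda_handler(event, context):
    params = event.get("queryStringParameters") or {}
    image_id = (event.get("pathParameters") or {}).get("imageId")
    if not image_id or set(params) - ALLOWED_PARAMS:
        return {"statusCode": 400, "body": json.dumps({"error": "invalid request"})}
    key = customized_key(image_id, params)
    # A real function would load the original from S3, apply the customization,
    # and upload the result under `key` (omitted in this sketch)
    url = f"https://{CLOUDFRONT_DOMAIN}/{quote(key)}"
    return {"statusCode": 200, "body": json.dumps({"url": url})}
```

Deriving the key from a hash of the parameters means identical customization requests resolve to the same object, which CloudFront can then serve from its edge caches.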

Reference: https://docs.aws.amazon.com/lambda/latest/dg/welcome.html

https://aws.amazon.com/blogs/networking-and-content-delivery/amazon-s3-amazon-cloudfront-a-match-made-in-the-cloud/

QUESTION 12

A company must migrate 20 TB of data from a data center to the AWS Cloud within 30 days. The company's network bandwidth is limited to 15 Mbps and cannot exceed 70% utilization. What should a solutions architect do to meet these requirements?

A. Use AWS Snowball.

B. Use AWS DataSync.

C. Use a secure VPN connection.

D. Use Amazon S3 Transfer Acceleration.

Correct Answer: A
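A quick calculation shows why an online transfer cannot meet the deadline. This sketch assumes decimal units (1 TB = 10^12 bytes, 1 Mbps = 10^6 bits per second) and ignores protocol overhead, which would only make the result worse. The function name is hypothetical.

```python
def transfer_days(data_bytes: float, link_bps: float, max_utilization: float) -> float:
    """Days needed to push data_bytes over a link capped at max_utilization of link_bps."""
    usable_bps = link_bps * max_utilization
    seconds = data_bytes * 8 / usable_bps
    return seconds / 86_400


capped = transfer_days(20e12, 15e6, 0.70)  # 20 TB over 15 Mbps at the 70% cap
full = transfer_days(20e12, 15e6, 1.00)    # same link with no cap, for comparison
print(f"{capped:.0f} days at 70%, {full:.0f} days at 100%")  # 176 days at 70%, 123 days at 100%
```

Even running flat out with no cap, the link would need roughly four months, so an offline transfer device is the only option that fits a 30-day window.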

QUESTION 13

A company hosts an application used to upload files to an Amazon S3 bucket. Once uploaded, the files are processed to extract metadata, which takes less than 5 seconds. The volume and frequency of the uploads vary from a few files each hour to hundreds of concurrent uploads. The company has asked a solutions architect to design a cost-effective architecture that will meet these requirements.

What should the solutions architect recommend?

A. Configure AWS CloudTrail trails to log S3 API calls. Use AWS AppSync to process the files

B. Configure an object-created event notification within the S3 bucket to invoke an AWS Lambda function to process the files.

C. Configure Amazon Kinesis Data Streams to process and send data to Amazon S3. Invoke an AWS Lambda function to process the files

D. Configure an Amazon Simple Notification Service (Amazon SNS) topic to process the files uploaded to Amazon S3. Invoke an AWS Lambda function to process the files.

Correct Answer: B
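For option B, the S3 event notification invokes Lambda with a JSON event whose `Records` list carries the bucket name and object key for each upload. A minimal handler sketch is below; `extract_metadata` is a hypothetical placeholder for the real processing. Object keys arrive URL-encoded in the event (a space becomes `+`), so the handler decodes them with `unquote_plus` before use.

```python
from urllib.parse import unquote_plus


def extract_metadata(bucket: str, key: str, size: int) -> dict:
    # Hypothetical placeholder for the real (under 5 second) metadata extraction
    extension = key.rsplit(".", 1)[-1].lower() if "." in key else ""
    return {"bucket": bucket, "key": key, "size": size, "extension": extension}


def lambda_handler(event, context):
    results = []
    # One invocation can carry several records; handle each uploaded object
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])
        size = record["s3"]["object"].get("size", 0)
        results.append(extract_metadata(bucket, key, size))
    return results
```

Because Lambda scales with the number of incoming events, the same function handles a few files an hour or hundreds of concurrent uploads, and nothing runs (or costs money) between uploads.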

Latest leads4pass Amazon dumps Discount Code 2020

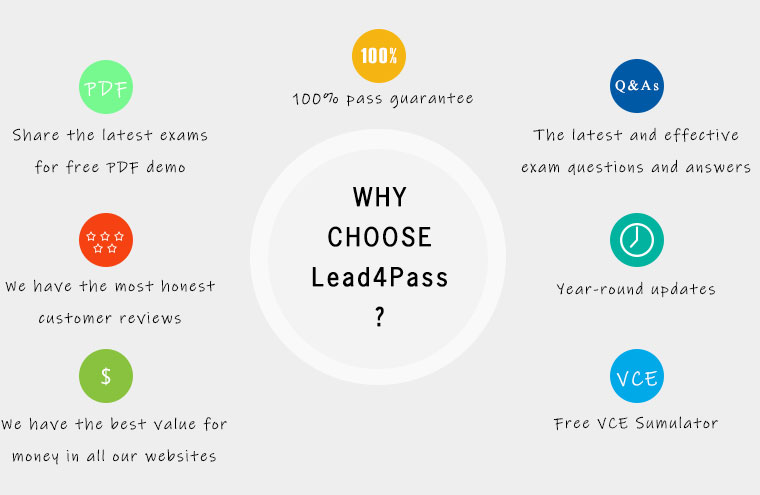

About The leads4pass Dumps Advantage

leads4pass has 7 years of exam experience and a team of professional Amazon exam experts! Exam questions are updated throughout the year! The most complete exam questions and answers! The safest buying experience! The greatest free sharing of exam practice questions and answers!

Our goal is to help more people pass the Amazon exam! Exams are a part of life, and they are important!

As you study, remember to review and summarize what you have learned! Trust leads4pass to help you pass the exam 100%!

Summary:

This blog shares the latest Amazon SAA-C02 exam dumps, SAA-C02 exam questions and answers, SAA-C02 PDF, and SAA-C02 exam video!

You can also practice the test online! leads4pass is the industry leader!

Choose leads4pass to pass the Amazon SAA-C02 exam, “AWS Certified Solutions Architect – Associate (SAA-C02)”. We help you successfully pass the SAA-C02 exam.

P.S.

Latest update leads4pass SAA-C02 exam dumps: https://www.leads4pass.com/saa-c02.html (439 Q&As)

[Q1-Q12 PDF] Free Amazon SAA-C02 pdf dumps download from Google Drive: https://drive.google.com/file/d/1puTG8aVJOZjo3QqM5mL8epeiQQjAP3nV/